SEO (Search Engine Optimisation) is very popular these days. There is quite a lot of web-based software for it, such as Google Analytics and Google Webmaster Tools. Many companies make a living from SEO. For example, the commercial website www.analyticsseo.com offers a web-based GUI for doing SEO.

Search engines have their own ranking algorithms, which place useful and meaningful web pages higher and penalise useless or spammy websites. To satisfy the search engines, take care of the following.

1. Site content must be useful, spam-free and original.

Search engines do not like copycats. If a website automatically copies content from other websites, it may be given little or no page rank. That is also why, if you move your domain, it is better to use a 301 (permanent) redirect: it tells search engines that the old pages have moved, so the new site is not treated as a copy of the old one. Also, if a page or post has fewer than 150 words, it may be treated as useless. Some websites use scripts to insert hot keywords into every post, and their page ranks rise quickly for a while. Sooner or later, however, search engines detect that users leave these pages immediately because they find them useless, and the site is penalised.

It is also recommended to update the site regularly with novel content, which search engines do like (e.g. one post every three days rather than occasional bursts of 10 posts in a single day).
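For the 301 redirect mentioned above, a minimal PHP sketch for the old domain might look like the following. It assumes that every path and query string stays the same on the new domain. The new host `www.example.com` and both helper names are hypothetical.

```php
<?php
// Minimal sketch of a domain-move 301 redirect (hypothetical helper names).
// Assumption: paths and query strings are unchanged on the new domain.
function redirect_target(string $newHost, string $requestUri): string
{
    // Keep the path and query string so every old URL maps to its new twin.
    return 'https://' . $newHost . $requestUri;
}

function redirect_permanently(string $url): void
{
    // The third argument of header() sets the HTTP status code to 301.
    header('Location: ' . $url, true, 301);
    exit;
}

// Usage at the top of every page (or a front controller) on the old domain:
// redirect_permanently(redirect_target('www.example.com', $_SERVER['REQUEST_URI']));
```

Keeping the path means each old page points to its own new address rather than to the home page, so visitors and search engines both land on the matching content.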

2. Compression

Page speed is one of the important factors that search engines take into account. If a site is very slow, search engines will not favour it. Compressing responses with gzip can make downloads 2-3 times faster. You can use an online gzip test tool to check whether your website is gzipped.
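You can also check this yourself. Request the page with an `Accept-Encoding: gzip` header and look at the `Content-Encoding` response header. The following is a minimal sketch: `response_is_gzipped` is a hypothetical helper, and the network call stays in a comment because the URL is only a placeholder.

```php
<?php
// Returns true if a list of raw HTTP response header lines says the body is gzipped.
function response_is_gzipped(array $headerLines): bool
{
    foreach ($headerLines as $line) {
        // Header names are case-insensitive, e.g. "Content-Encoding: gzip".
        if (preg_match('/^content-encoding:\s*.*\bgzip\b/i', $line)) {
            return true;
        }
    }
    return false;
}

// Usage (needs network access; the URL is a placeholder):
// $context = stream_context_create(['http' => ['header' => "Accept-Encoding: gzip\r\n"]]);
// $headers = get_headers('https://www.example.com/', false, $context);
// var_dump(response_is_gzipped($headers));
```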

3. Meta data (keywords, description)

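The keywords and the description are the two best-known pieces of metadata. They go in meta tags in the HTML head section, so add them if they are missing. (Google has stated that it does not use the keywords meta tag for web ranking. However, it may show the description as the snippet in its search results.) You should always: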

- Choose the keywords wisely.

- Make the description concise and precise to reflect your site content.

- Avoid duplicated keywords.
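Generating both tags from PHP makes it easy to drop duplicated keywords and to escape the text safely. The following is a minimal sketch. `meta_tags` is a hypothetical helper, and it treats keywords that differ only in letter case as duplicates.

```php
<?php
// Build the keywords and description meta tags for the HTML head section.
// Hypothetical helper: duplicated keywords are removed case-insensitively.
function meta_tags(array $keywords, string $description): string
{
    $unique = [];
    foreach ($keywords as $keyword) {
        $keyword = trim($keyword);
        $key = strtolower($keyword);
        if ($keyword !== '' && !isset($unique[$key])) {
            $unique[$key] = $keyword; // keep the first spelling seen
        }
    }
    // Escape quotes and ampersands so the text cannot break the attribute.
    $attr = fn(string $s): string => htmlspecialchars($s, ENT_QUOTES, 'UTF-8');
    return '<meta name="keywords" content="' . $attr(implode(', ', $unique)) . '">' . "\n"
         . '<meta name="description" content="' . $attr($description) . '">';
}

// Usage inside <head>:
// echo meta_tags(['SEO', 'PHP', 'seo', 'gzip'], 'Tips for making a PHP site search-engine friendly.');
```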

4. robots.txt

robots.txt is a plain-text file in the root directory of your website. It tells search engines which files/directories they may crawl and which they may not. The simplest example is the following two lines, which give search engines full access:

```
User-agent: *
Disallow:
```

The following does the opposite and tells search engines to stay out of the whole site:

```
User-agent: *
Disallow: /
```

You can use multiple Allow and Disallow lines to restrict what search engines crawl, which may shorten the crawling time. If you don't have permission to write to the root directory, you can put robots meta tags in the HTML head section instead. For example, the following grants full access:


```html
<meta name="robots" content="index, follow">
<meta name="googlebot" content="index, follow, snippet, archive">
```

Another example is the robots.txt on my own website. You can also list your sitemaps in the file (one `Sitemap:` line per sitemap) to help search engines crawl the site.
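To see how Allow and Disallow lines interact, here is a minimal sketch of how a crawler might decide whether a path may be crawled. It simplifies the rules in several ways. Only the `User-agent: *` group is read. Consecutive User-agent lines are not merged, and wildcards (`*`, `$`) are not supported. As in RFC 9309, the longest matching rule wins, and Allow wins a tie. `robots_allows` is a hypothetical helper name.

```php
<?php
// Minimal sketch (hypothetical helper): may a generic crawler fetch $path?
// Simplifications: only the "User-agent: *" group is read, consecutive
// User-agent lines are not merged, wildcards are not supported, and
// every rule is treated as a plain path prefix.
function robots_allows(string $robotsTxt, string $path): bool
{
    $inStarGroup = false; // are we inside the "User-agent: *" group?
    $bestLength = -1;     // length of the longest matching rule so far
    $allowed = true;      // a path that matches no rule may be crawled
    foreach (preg_split('/\r\n|\r|\n/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line)); // drop comments
        if ($line === '' || strpos($line, ':') === false) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);
        if ($field === 'user-agent') {
            $inStarGroup = ($value === '*');
        } elseif ($inStarGroup && ($field === 'allow' || $field === 'disallow') && $value !== '') {
            // Longest matching rule wins; on a tie, Allow wins (RFC 9309).
            $length = strlen($value);
            if (strpos($path, $value) === 0
                && ($length > $bestLength || ($length === $bestLength && $field === 'allow'))) {
                $bestLength = $length;
                $allowed = ($field === 'allow');
            }
        }
    }
    return $allowed;
}
```

With this sketch, an empty `Disallow:` allows everything and `Disallow: /` blocks everything, matching the two examples above. `Allow: /private/public/` can reopen part of a disallowed `/private/` directory.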

5. Caching

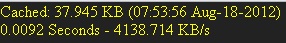

Caching improves site speed a lot by serving static HTML files instead of running dynamic PHP pages. In most cases this reduces the server response time and workload, so the server can handle more client requests at the same time. Take my site for example: if you see the icon above at the top-left of the HTML page, congratulations, you have reached a static cached HTML page, which might not reflect the latest content.
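The following is a minimal sketch of such a static-file cache. It assumes a writable cache directory and a fixed expiry time. The helper names are hypothetical, and this is not necessarily how the cache on my site works.

```php
<?php
// Minimal sketch of a static-file page cache (hypothetical helper names).
// A rendered page is saved as an HTML file and served directly, without
// running the PHP that built it, until the file is $ttl seconds old.
function cache_file(string $cacheDir, string $requestUri): string
{
    // One file per URL; md5() keeps the file name filesystem-safe.
    return rtrim($cacheDir, '/') . '/' . md5($requestUri) . '.html';
}

function cache_read(string $file, int $ttl): ?string
{
    if (is_file($file) && time() - filemtime($file) < $ttl) {
        $html = file_get_contents($file);
        return $html === false ? null : $html;
    }
    return null; // missing or expired: the page must be rebuilt
}

function cache_write(string $file, string $html): void
{
    // Write to a temporary file, then rename it, so readers never see a half-written page.
    $tmp = $file . '.' . getmypid() . '.tmp';
    file_put_contents($tmp, $html);
    rename($tmp, $file);
}

// Usage at the top of a dynamic page (the cache directory must exist and be writable):
// $file = cache_file(__DIR__ . '/cache', $_SERVER['REQUEST_URI']);
// if (($html = cache_read($file, 3600)) !== null) { echo $html; exit; }
// ob_start();
// ... build the page as usual ...
// $html = ob_get_clean();
// cache_write($file, $html);
// echo $html;
```

Do not cache pages that differ per visitor this way, such as pages for logged-in users, because every visitor would see the same saved copy.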

6. Shrink JavaScript/CSS files

JavaScript/CSS files matter less than the text content of the HTML, because search engines care mostly about what the site says rather than how it looks. In most cases these files only provide the appearance and user interaction. Loading them into the browser takes time and does not improve how the HTML content is ranked, so keep them as small as possible to speed up page loading. For example, you can rename *.js or *.css files to *.php so that PHP compresses the output (e.g. using gzip). The script must then send the correct Content-Type header itself. Alternatively, you can combine several files of the same type in one PHP script with readfile or include, which reduces the number of browser requests. The files must all be JavaScript or all CSS, since one response has a single content type. Note that include would also execute any PHP code inside the files. For example,


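```php
<?php
// combine.php: serve several CSS files as one gzip-compressed response
header('Content-Type: text/css'); // use application/javascript when combining .js files
ob_start('ob_gzhandler');         // gzip the output if the browser accepts it
readfile('a.css');
readfile('b.css');
```

You can also remove comments and white space, as described in the next section. This reduces the file size further and speeds up page loading.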

7. Remove white space/comments

White space, comments and new lines do not matter to search engines. Crawlers are not human and do not need them to understand your site, and too many of them only make pages bigger and slower to download and analyse. The following PHP function removes comments and new lines from CSS output. You can adapt it to HTML comments and use it as an output filter before the output is sent to the browser.


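```php
<?php
// Output filter for CSS: strip /* ... */ comments and layout white space.
// It also removes newlines, so test the result before using it on JavaScript or HTML.
function compress($buffer)
{
    /* remove comments */
    $buffer = preg_replace('!/\*[^*]*\*+([^/][^*]*\*+)*/!', '', $buffer);
    /* remove tabs, newlines and double spaces (single spaces are kept) */
    $buffer = str_replace(array("\r\n", "\r", "\n", "\t", '  ', '    ', '    '), '', $buffer);
    return $buffer;
}

ob_start('ob_gzhandler'); // outer buffer: compress using gzip
ob_start('compress');     // inner buffer runs first: remove white space and comments
```

8. Remove Duplicated Pages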

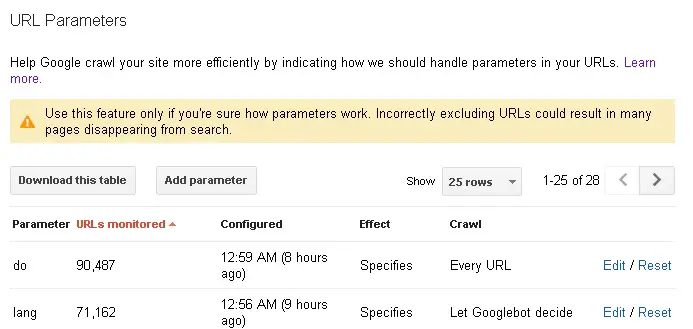

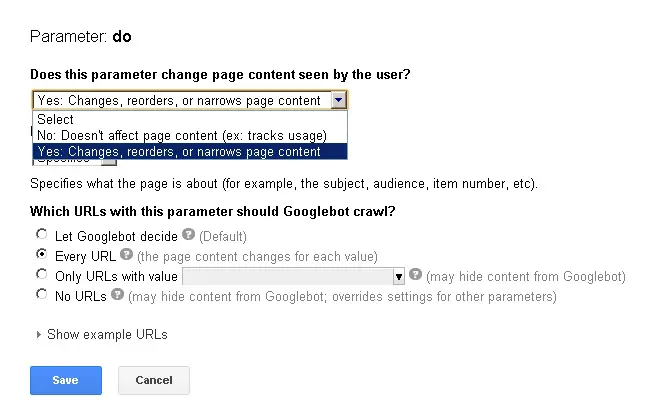

If your site content is generated by a server-side language such as PHP, you have probably met many pages that output different content depending on the GET parameters in the QUERY_STRING. For example, some sites use $_GET['lang'] to select a translation, and others use $_GET['skin'] to apply a different template. Some of these GET parameters change the content of the HTML output, but others are irrelevant to it. The skin parameter is one example. Google Webmaster Tools lets you tell Google which parameters to ignore. This helps search engines stop indexing near-identical pages that differ only in, for example, the HTML template.

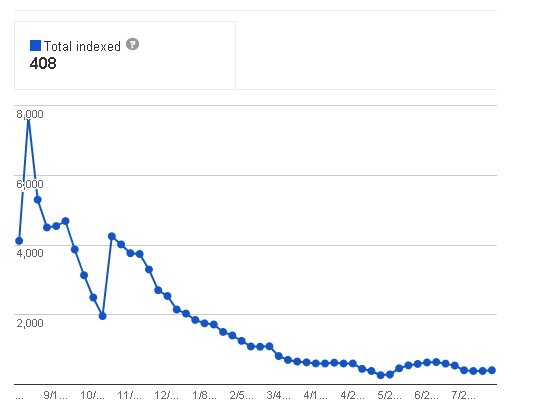

Take my site for example. About a year ago, before I knew about this, the number of URL parameter combinations grew exponentially. Googlebot crawled lots of duplicate pages, which made indexing my site inefficient. As you can see, after I tuned the URL parameters, the number of pages to index dropped significantly.
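Besides configuring URL parameters in Google Webmaster Tools, you can compute one canonical URL per page in PHP and print it in a `<link rel="canonical">` tag (see section 11). The following is a minimal sketch. The helper name and the choice of ignored parameters (here `skin`) are assumptions for illustration.

```php
<?php
// Build a canonical URL by dropping query parameters that only change the
// presentation (e.g. 'skin') and sorting the rest, so that equivalent URLs
// map to one address. Ports and #fragments are dropped in this sketch.
function canonical_url(string $url, array $ignoredParams): string
{
    $parts = parse_url($url);
    parse_str($parts['query'] ?? '', $params);
    foreach ($ignoredParams as $name) {
        unset($params[$name]);
    }
    ksort($params); // a stable parameter order avoids further duplicates
    $canonical = ($parts['scheme'] ?? 'https') . '://' . ($parts['host'] ?? '')
               . ($parts['path'] ?? '/');
    $query = http_build_query($params);
    return $query === '' ? $canonical : $canonical . '?' . $query;
}

// Usage: print the tag in the head section of every dynamic page.
// $url = canonical_url('https://www.example.com/page.php?skin=dark&lang=en', ['skin']);
// echo '<link rel="canonical" href="' . htmlspecialchars($url, ENT_QUOTES, 'UTF-8') . '">';
```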

9. Sitemaps

Sitemaps are an important way to tell search engines which URLs a website has. They can be in XML, (X)HTML or plain-text format, and they can also be listed in robots.txt, as mentioned before. In Google Webmaster Tools you can submit sitemaps manually for indexing. It is advisable to have at least one sitemap per website. Some search engines may also consider a site with sitemaps less likely to be spam.
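An XML sitemap is easy to generate from PHP. The following minimal sketch follows the sitemaps.org 0.9 format with only the required `<loc>` element per URL. The helper name and the example URLs are hypothetical.

```php
<?php
// Minimal sketch: build an XML sitemap (sitemaps.org protocol 0.9)
// from a list of absolute URLs. Optional fields such as lastmod are omitted.
function build_sitemap(array $urls): string
{
    $xml = '<?xml version="1.0" encoding="UTF-8"?>' . "\n"
         . '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">' . "\n";
    foreach ($urls as $url) {
        // URLs must be entity-escaped, e.g. & becomes &amp;
        $xml .= '  <url><loc>' . htmlspecialchars($url, ENT_XML1 | ENT_QUOTES, 'UTF-8') . "</loc></url>\n";
    }
    return $xml . "</urlset>\n";
}

// Usage in a hypothetical sitemap.php:
// header('Content-Type: application/xml');
// echo build_sitemap(['https://www.example.com/', 'https://www.example.com/page.php?id=1&lang=en']);
// Then list it in robots.txt with a line such as: Sitemap: https://www.example.com/sitemap.php
```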

10. Cleaner HTML / Reduce Warnings/Errors in Scripts

Scripts, and the HTML they output, should be well-formatted and free of errors and warnings. For example, improperly matched HTML tags can mislead the indexing results. You can look up script warnings and errors in the server's log files, e.g. /htdocs/logfiles if you are using a Linux + Apache server (the exact location depends on your host). Use the latest technology such as HTML5 where possible, because it provides a cleaner HTML structure. It is also advisable to validate your pages with the W3C validators (e.g. for RSS, XML and XHTML).
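Before running a full validator, you can catch unmatched tags in XML, XHTML or RSS output with libxml through PHP's DOM extension. This minimal sketch only checks well-formedness (properly nested and closed tags). It is not a full W3C validation, and `xml_errors` is a hypothetical helper name.

```php
<?php
// Minimal sketch: list well-formedness errors in XML/XHTML/RSS markup.
function xml_errors(string $markup): array
{
    $previous = libxml_use_internal_errors(true); // collect errors instead of emitting warnings
    libxml_clear_errors();
    $doc = new DOMDocument();
    $doc->loadXML($markup);
    $messages = [];
    foreach (libxml_get_errors() as $error) {
        $messages[] = sprintf('line %d: %s', $error->line, trim($error->message));
    }
    libxml_clear_errors();
    libxml_use_internal_errors($previous); // restore the previous error mode
    return $messages;
}

// Usage: print_r(xml_errors(file_get_contents('page.xhtml')));
```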

11. Canonical URLs and nofollow tags

Canonical URLs tell search engines which of several similar or identical pages is the preferred one. The duplicates then do not compete with each other, and their ranking signals are consolidated on one URL. Adding a link tag with rel="canonical" to the head section tells Google: "Of all these pages with identical content, this page is the most useful. Please prioritize it in search results."

Adding rel="nofollow" to a link tells search engines not to follow that specific link, so Google won't pass any page rank to it. This is a useful way to fight spam. Many spammers use bots to leave posts in guestbooks and similar places, so that their sites get more incoming links and therefore higher page ranks. Nofollow makes those links worthless to them. Alternatively, to apply this to a whole page, you can place the following in the head section of the HTML. Note that noindex also keeps the page itself out of the index.


```html
<meta name="robots" content="noindex, nofollow">
<meta name="googlebot" content="noindex, nofollow, noarchive">
```
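For links that visitors leave in a guestbook or in comments, you can add rel="nofollow" when rendering them. The following is a minimal sketch. The helper name is hypothetical, and so is the policy of accepting only http/https links.

```php
<?php
// Minimal sketch: render a visitor-supplied link (e.g. from a guestbook)
// with rel="nofollow", so it passes no page rank to the linked site.
function user_link(string $url, string $text): string
{
    $scheme = strtolower((string) parse_url($url, PHP_URL_SCHEME));
    if ($scheme !== 'http' && $scheme !== 'https') {
        // Refuse javascript: and other schemes; show the text only.
        return htmlspecialchars($text, ENT_QUOTES, 'UTF-8');
    }
    return '<a href="' . htmlspecialchars($url, ENT_QUOTES, 'UTF-8') . '" rel="nofollow">'
         . htmlspecialchars($text, ENT_QUOTES, 'UTF-8') . '</a>';
}
```

Since 2019, Google also recognises rel="ugc" for links in user-generated content.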

—

Well, if a search engine is not good enough, there is not much you can do about it. For example, I think Google is an excellent search engine while Baidu is not, at least for English content. When I searched for 'dropbox', Google gave me exactly what I wanted, but Baidu's results were a joke.

SEO-ers need to be patient. It will take more than a day or two for your page rank to increase or for your site to attract a lot of visitors. Remember, Rome was not built in a day.

–EOF (The Ultimate Computing & Technology Blog) —
