My website steakovercooked.com has been hosted on one of the Fasthosts shared-hosting servers. Recently, the site has been disabled several times because of a huge number of requests, most of which come from bots crawling the site. Ewan MacDonald, an IT Operations Engineer at Fasthosts, emailed me:

Dear justyy

I’m not sure what you are doing with your site exactly but you’ve been consuming over 75% of the available Apache processes. This has caused massive problems for all the other customers on the webserver.

I am running a security scan against your site at the moment.

Please note that your site contains 85,000 files amounting to 8.6GB. Our terms state that all files in your webspace must be part of the website so are all 85,000 files part of the site and accessible through the site? If not, they need to be removed please.

I am also going to remove the 2 renamed htdocs folders unless you object?

If your site causes the same performance problem whilst the scan is running I will take it offline again until you can provide an explanation of why it’s tying up approximately 200 Apache processes.

Best regards,

Then I checked the Apache error log and found lots of these:

[Wed Jul 23 21:40:21 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

[Wed Jul 23 21:40:22 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

[Wed Jul 23 21:40:30 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

[Wed Jul 23 21:40:31 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

[Wed Jul 23 21:40:31 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

[Wed Jul 23 21:40:31 2014] [warn] mod_fcgid: can't apply process slot for /var/www/fcgi/php54-cgi

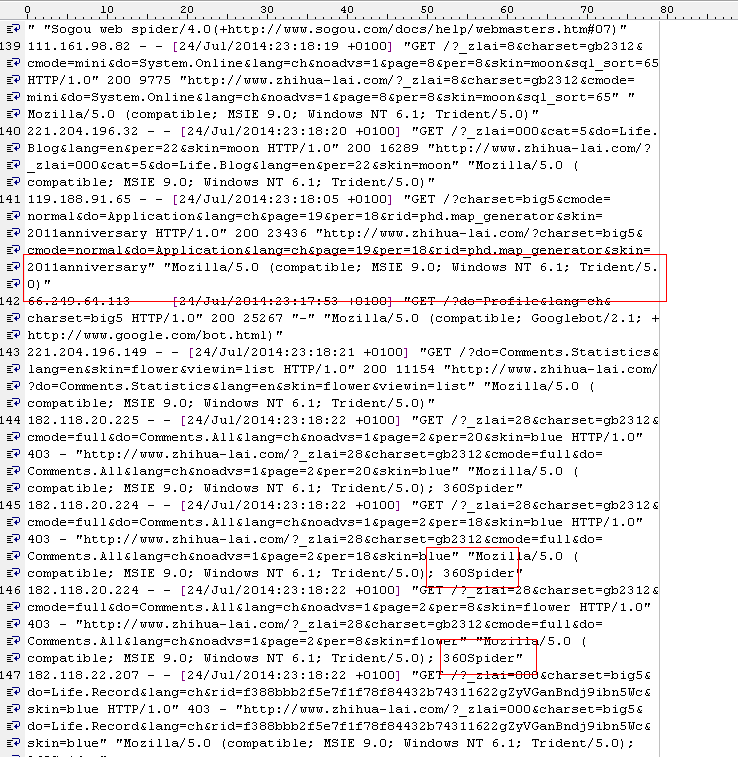

It looks like 360Spider was hitting the site quite heavily, which affected the other websites on the same shared host. That is why Fasthosts had to take my site down.
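To see which crawler is responsible, it helps to count requests per user agent in the access log. The following is a minimal Python sketch, assuming the log is in Apache's combined format; the sample lines and the `count_user_agents` helper are made up for illustration and are not taken from my real log.

```python
import re
from collections import Counter

# Combined Log Format:
# IP identity user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def count_user_agents(lines):
    """Return a Counter mapping each user-agent string to its request count."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match:  # lines that are not in combined format are skipped
            counts[match.group("agent")] += 1
    return counts


# Hypothetical sample lines (documentation IP addresses, invented user agents).
SAMPLE = [
    '192.0.2.10 - - [23/Jul/2014:21:40:21 +0100] "GET /?page=1 HTTP/1.1" 200 3919 "-" "Mozilla/5.0 (compatible; 360Spider)"',
    '192.0.2.11 - - [23/Jul/2014:21:40:22 +0100] "GET /?page=2 HTTP/1.1" 200 3919 "-" "Mozilla/5.0 (compatible; 360Spider)"',
    '198.51.100.7 - - [23/Jul/2014:21:40:30 +0100] "GET / HTTP/1.1" 200 5120 "-" "ExampleBrowser/1.0"',
]

if __name__ == "__main__":
    # Print the busiest user agents first.
    for agent, hits in count_user_agents(SAMPLE).most_common(3):
        print(hits, agent)
```

On a real server you would pass an open log file instead of `SAMPLE`; where that file lives depends on the host.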

The 360Spider problem returned later, so they disabled my site again until I had a script ready to block its access, because it was causing issues for other users of the server.

I am sorry that this caused trouble for the other sites on the shared host, but in my opinion it would be better to block these bots at a higher level (e.g. in the server-wide Apache settings), since any other website on the server may face the same problem. I had already optimised my website to reduce CPU usage by caching pages as static HTML. But because there are quite a number of pages (around 5,000 reported in Google Webmaster Tools), some spiders may not be clever enough to spot the duplicates. Google's spiders are fine because I can configure the URL parameters and they obey the robots.txt file. These aggressive spiders (e.g. 360, Youdao), however, don’t really obey the crawling rules. The only way to ban them is to blacklist them, which I can certainly do for my own site, but other users may face the same problem.

robots.txt

robots.txt is a text file in the root of the website that tells search bots which directories they may crawl and which they may not. But not all bots follow the ‘instructions’. Here are the rules I added to tell these bad bots to go away.

# root

User-agent: *

Crawl-Delay: 1

Disallow: /cgi-bin/

Disallow: /tmp/

User-agent: 360Spider

Disallow: /

User-agent: YoudaoBot

Disallow: /

User-agent: sogou spider

Disallow: /

User-agent: YisouSpider

Disallow: /

User-agent: LinksCrawler

Disallow: /

User-agent: EasouSpider

Disallow: /
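Before relying on the rules, it is worth checking how a well-behaved parser reads them. Here is a small sketch using Python's standard `urllib.robotparser` on a shortened copy of the rules, with the `User-agent: *` directives kept in a single group (this parser only honours the first `*` group it sees). The URLs passed to `can_fetch` are just examples.

```python
from urllib.robotparser import RobotFileParser

# Shortened copy of the rules above; only two of the blocked bots are listed.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 1
Disallow: /cgi-bin/
Disallow: /tmp/

User-agent: 360Spider
Disallow: /

User-agent: YoudaoBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A bot that honours robots.txt would make these decisions.
blocked_bot = parser.can_fetch("360Spider", "/index.php")
normal_page = parser.can_fetch("Googlebot", "/index.php")
tmp_page = parser.can_fetch("Googlebot", "/tmp/cache.html")
delay = parser.crawl_delay("Googlebot")

if __name__ == "__main__":
    print("360Spider may fetch /index.php:", blocked_bot)
    print("Googlebot may fetch /index.php:", normal_page)
    print("Googlebot may fetch /tmp/cache.html:", tmp_page)
    print("Crawl delay for Googlebot:", delay)
```

This only tells you what an obedient crawler would do. The bad bots ignore the file entirely, which is why the blocks further down are needed.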

.htaccess

.htaccess is a hidden plain-text file that holds per-directory Apache configuration. It is commonly used with Apache's rewrite module, mod_rewrite, to make URLs look nicer, and it can also be used to block these bots.

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteBase /

RewriteCond %{REQUEST_URI} !^/robots\.txt$

RewriteCond %{REQUEST_URI} !^/error\.html$

# Every user-agent condition except the last one carries [OR]

RewriteCond %{HTTP_USER_AGENT} EasouSpider [NC,OR]

RewriteCond %{HTTP_USER_AGENT} YisouSpider [NC,OR]

RewriteCond %{HTTP_USER_AGENT} 360Spider [NC,OR]

RewriteCond %{HTTP_USER_AGENT} LinksCrawler [NC,OR]

RewriteCond %{HTTP_USER_AGENT} Sogou\ web\ spider [NC]

RewriteRule ^.*$ - [F,L]

</IfModule>
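mod_rewrite combines consecutive RewriteCond lines with AND unless a condition carries the [OR] flag, which chains it to the next one. So in a list of alternative user agents, every condition except the last needs [OR]. Getting that wrong by hand is easy when the list grows, so here is a hedged sketch of a small generator. The function name `build_block_rules` is my own, and it leaves out the robots.txt and error.html exceptions for brevity.

```python
import re


def build_block_rules(bot_names):
    """Return .htaccess lines that answer 403 Forbidden to the given bots."""
    if not bot_names:
        raise ValueError("need at least one bot name")
    lines = ["RewriteEngine On"]
    last = len(bot_names) - 1
    for i, name in enumerate(bot_names):
        # re.escape also backslash-escapes spaces, which RewriteCond requires
        # because an unescaped space would end the pattern argument.
        pattern = re.escape(name)
        # All alternatives are OR-ed together; the last one closes the chain.
        flags = "[NC]" if i == last else "[NC,OR]"
        lines.append(f"RewriteCond %{{HTTP_USER_AGENT}} {pattern} {flags}")
    lines.append("RewriteRule ^.*$ - [F,L]")
    return lines


if __name__ == "__main__":
    for line in build_block_rules(["EasouSpider", "360Spider", "Sogou web spider"]):
        print(line)
```

The output can be pasted inside an `<IfModule mod_rewrite.c>` block. Treat it as a starting point and test it on your own server before trusting it.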

<IfModule mod_setenvif.c>

SetEnvIfNoCase User-Agent "EasouSpider" bad_bot

SetEnvIfNoCase User-Agent "YisouSpider" bad_bot

SetEnvIfNoCase User-Agent "LinksCrawler" bad_bot

SetEnvIfNoCase User-Agent "360Spider" bad_bot

SetEnvIfNoCase User-Agent "Sogou" bad_bot

Order Allow,Deny

Allow from All

Deny from env=bad_bot

</IfModule>

PHP code

As a safety precaution, I have also put the following code in index.php, which generates different pages according to the URL parameters. 99% of the site's pages are generated by this index file.

$agent='';

if (isset($_SERVER['HTTP_USER_AGENT']))

{

$agent = $_SERVER['HTTP_USER_AGENT'];

}

define('BADBOTS','/(yisouspider|easouspider|yisou|youdaobot|yodao|360|linkscrawler|sogou)/i');

if (preg_match(BADBOTS, $agent)) {

die();

}

Basically, the PHP above checks the HTTP_USER_AGENT string against these bad bots. preg_match uses a regular expression, and the /i modifier makes the comparison case-insensitive.
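A pattern like this is easy to try offline before it goes live. The sketch below is a Python equivalent of the check, not the PHP that runs on the site. It compares the broad `360` token with a narrower `360spider` alternative, which is my own suggestion. The user-agent strings are assumed examples; in particular I am assuming that some ordinary browsers carry a `360SE` token.

```python
import re

# Same alternatives as the PHP pattern; 'sogou' is the crawler's own spelling.
BROAD = re.compile(
    r"(yisouspider|easouspider|yisou|youdaobot|yodao|360|linkscrawler|sogou)",
    re.IGNORECASE,
)
# Hypothetical narrower variant: match the spider's name, not any "360".
NARROW = re.compile(
    r"(yisouspider|easouspider|yisou|youdaobot|yodao|360spider|linkscrawler|sogou)",
    re.IGNORECASE,
)


def is_blocked(pattern, user_agent):
    """Return True if the user agent matches the bad-bot pattern."""
    return pattern.search(user_agent or "") is not None


# Assumed example strings, for illustration only.
SPIDER_UA = "Mozilla/5.0 (compatible; 360Spider)"
BROWSER_UA = "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0; 360SE)"

if __name__ == "__main__":
    for label, pattern in (("broad", BROAD), ("narrow", NARROW)):
        print(label, "blocks spider:", is_blocked(pattern, SPIDER_UA))
        print(label, "blocks browser:", is_blocked(pattern, BROWSER_UA))
```

Whether the broad match is acceptable depends on your visitors. On a site with no audience using such browsers, blocking anything that mentions 360 may be exactly what you want.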

I have also noticed quite a few entries like this in the log file:

119.188.91.121 - - [24/Jul/2014:22:39:51 +0100] "GET /?charset=big5&do=System.Online&lang=ch&page=25&per=10&skin=2011anniversary HTTP/1.0" 200 3919 "https://steakovercooked.com/ … …" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"

Judging from the HTTP_USER_AGENT you would normally think this is not a bot, but I think it is. These bots are the worst: they can send whatever user agent they like, and they usually come from several IPs, so it is not easy to identify all of them by specific IP ranges.
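When the user agent cannot be trusted, grouping requests by network rather than by single address can still expose a crawler that rotates through neighbouring IPs. A rough sketch, assuming a /24 grouping is good enough (the prefix length and the `hits_per_subnet` name are my own choices, and the addresses are documentation examples):

```python
import ipaddress
from collections import Counter


def hits_per_subnet(ips, prefix=24):
    """Count requests per IPv4 network of the given prefix length."""
    counts = Counter()
    for ip in ips:
        # strict=False lets us pass a host address and get its network back.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts


# Made-up client addresses from the reserved documentation ranges.
SAMPLE_IPS = ["192.0.2.1", "192.0.2.77", "192.0.2.200", "198.51.100.5"]

if __name__ == "__main__":
    for network, hits in hits_per_subnet(SAMPLE_IPS).most_common():
        print(hits, network)
```

A subnet that stands out here is only a hint. It could just as well be a proxy or a large ISP, so check a few requests by hand before denying the whole range.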

The methods above seem to be working, because in the Apache log I now find lots of these:

[Thu Jul 24 23:01:02 2014] [error] [client 61.135.189.186] client denied by server configuration: /home/linweb09/z/steakovercooked.com-1048918357/user/htdocs/

[Thu Jul 24 23:01:02 2014] [error] [client 61.135.189.186] client denied by server configuration: /home/linweb09/z/steakovercooked.com-1048918357/user/htdocs/error

[Thu Jul 24 23:01:08 2014] [error] [client 61.135.189.186] client denied by server configuration: /home/linweb09/z/steakovercooked.com-1048918357/user/htdocs/

And Fasthosts is happy too: “Yup, it’s looking much much better now. so I’ll close off this ticket. Many thanks for your action.”

However, this might not be a final solution. Eventually, I will move this site to a VPS, load-balanced servers or a dedicated server so that it won’t be taken down for this stupid reason.

The other day I read the following and couldn’t agree more: a web hosting company should NOT do anything to damage your website's SEO reputation, not to mention bring your entire site down without your permission. Fasthosts is far over the line, and that is why it has so many bad reviews (with words like rubbish, crap, stay away for life).

By the way, I am using QuickHostUK, which is simply the best. The VPS works just great and I have already moved a couple of sites over.



Are all Chinese bots bad?

I’m blocking Baiduspider because that aggressive bot came a couple of times a day, and my CPU hit 100%.

I think I’ll block all Chinese bots, because that country is not my target market. lol.

Btw, why aren’t you blocking any bots in your robots.txt?

I used to block Baidu and 360. Now it is OK since I’ve upgraded my server and I use CloudFlare to firewall any bad requests.

It is OK to block all Chinese bots if you are not targeting an audience in China.