Naive Bayes is a simple but surprisingly powerful algorithm for predictive modeling.

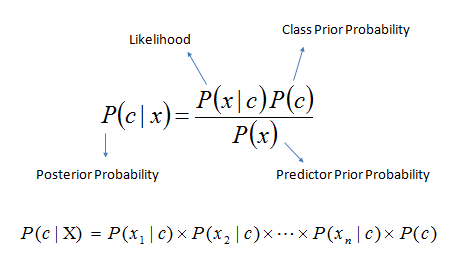

The model consists of two types of probabilities that can be calculated directly from your training data:

- The probability of each class.

- The conditional probability of each input (x) value given each class.
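For categorical inputs, both kinds of probability are simple counts over the training set. The sketch below assumes a tiny, made-up dataset of `(outlook, temperature)` rows with a yes/no label. The function name `fit_counts` and the nested-dict layout are illustrative choices, not a standard API. It applies no smoothing, so a value never seen with a class simply has no entry (probability zero). Practical implementations usually add a smoothing term for exactly this reason.

```python
from collections import Counter, defaultdict


def fit_counts(X, y):
    """Estimate P(class) and P(x_j = v | class) by counting (no smoothing)."""
    n = len(y)
    class_counts = Counter(y)
    # Prior: fraction of training rows that belong to each class.
    priors = {c: k / n for c, k in class_counts.items()}

    # counts[c][j][v] = number of class-c rows whose feature j equals v.
    counts = defaultdict(lambda: defaultdict(Counter))
    for row, c in zip(X, y):
        for j, v in enumerate(row):
            counts[c][j][v] += 1

    # Conditional probability: divide each count by the size of its class.
    likelihoods = {
        c: {j: {v: k / class_counts[c] for v, k in vals.items()}
            for j, vals in feats.items()}
        for c, feats in counts.items()
    }
    return priors, likelihoods


# Hypothetical toy data: (outlook, temperature) -> play?
X = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
     ("rainy", "cool"), ("sunny", "cool")]
y = ["no", "yes", "yes", "no", "yes"]

priors, likelihoods = fit_counts(X, y)
print(priors)                          # {'no': 0.4, 'yes': 0.6}
print(likelihoods["yes"][0]["sunny"])  # 2 of the 3 "yes" rows are sunny
```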

Once these probabilities are calculated, the model can make predictions for new data using Bayes' Theorem.
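A prediction multiplies the class prior by the conditional probability of each observed input value, then normalizes across classes. The sketch below uses hand-picked, hypothetical probabilities for two features of a message (`spam`/`ham` labels). The helper names `predict_proba` and `predict` are illustrative only. Multiplying the per-feature terms relies on the naive independence assumption. If every class ends up with a score of zero, the normalizing division fails; smoothing the estimates avoids that.

```python
def predict_proba(x, priors, likelihoods):
    """Posterior P(class | x) via Bayes' Theorem, assuming the features are
    conditionally independent given the class."""
    joint = {}
    for c, prior in priors.items():
        p = prior
        for j, v in enumerate(x):
            # A value never seen with class c is treated as probability 0.
            p *= likelihoods[c][j].get(v, 0.0)
        joint[c] = p
    # P(x) is the same for every class; dividing by it makes the scores sum to 1.
    evidence = sum(joint.values())
    return {c: p / evidence for c, p in joint.items()}


def predict(x, priors, likelihoods):
    """Return the class with the highest posterior probability."""
    posterior = predict_proba(x, priors, likelihoods)
    return max(posterior, key=posterior.get)


# Hypothetical probabilities: likelihoods[class][feature_index][value].
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": [{"offer": 0.7, "none": 0.3}, {"link": 0.8, "none": 0.2}],
    "ham":  [{"offer": 0.1, "none": 0.9}, {"link": 0.3, "none": 0.7}],
}

print(predict_proba(("offer", "link"), priors, likelihoods))
print(predict(("offer", "link"), priors, likelihoods))  # spam
```

Here the spam score is 0.4 × 0.7 × 0.8 = 0.224 against 0.6 × 0.1 × 0.3 = 0.018 for ham, so the posterior strongly favors spam.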

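When your data is real-valued, it is common to assume that each input variable follows a Gaussian distribution (bell curve) within each class, so that its conditional probability can be estimated from just the mean and standard deviation of that variable in that class.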
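Under the Gaussian assumption, "training" reduces to computing a per-class mean and standard deviation for every feature. The sketch below assumes a small, made-up two-feature dataset. The names `fit_gaussian`, `gaussian_log_pdf` and `predict` are illustrative only. It scores classes with log-densities rather than raw densities, because multiplying many small probabilities can underflow to zero. `statistics.stdev` needs at least two rows per class, and a feature that is constant within a class (standard deviation 0) would need special handling that this sketch omits.

```python
import math
from statistics import mean, stdev


def gaussian_log_pdf(x, mu, sigma):
    """Log of the normal (bell-curve) density N(mu, sigma^2) evaluated at x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def fit_gaussian(X, y):
    """For each class, store its prior and the (mean, std) of every feature."""
    model = {}
    for c in set(y):
        rows = [row for row, label in zip(X, y) if label == c]
        columns = zip(*rows)  # transpose: one tuple of values per feature
        params = [(mean(col), stdev(col)) for col in columns]
        model[c] = (len(rows) / len(y), params)
    return model


def predict(x, model):
    """Pick the class maximizing log P(c) + sum_j log N(x_j; mu_cj, sigma_cj)."""
    def score(c):
        prior, params = model[c]
        return math.log(prior) + sum(
            gaussian_log_pdf(v, mu, sigma) for v, (mu, sigma) in zip(x, params)
        )
    return max(model, key=score)


# Hypothetical 2-feature training set with two well-separated classes.
X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2],
     [3.0, 4.0], [3.2, 4.2], [2.8, 3.8]]
y = [0, 0, 0, 1, 1, 1]

model = fit_gaussian(X, y)
print(predict([1.1, 2.1], model))  # 0
print(predict([2.9, 4.1], model))  # 1
```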

Naive Bayes is called naive because it assumes that the input variables are independent of one another given the class. This is a strong assumption that rarely holds for real data; nevertheless, the technique is very effective on a wide range of complex problems.
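Written out, the independence assumption is what lets the likelihood of an input $x = (x_1, \dots, x_n)$ under a class $y$ split into one factor per variable, which turns Bayes' Theorem into a simple product:

$$
\begin{aligned}
P(x_1, \dots, x_n \mid y) &= \prod_{j=1}^{n} P(x_j \mid y) \\
P(y \mid x_1, \dots, x_n) &= \frac{P(y) \prod_{j=1}^{n} P(x_j \mid y)}{P(x_1, \dots, x_n)} \\
\hat{y} &= \arg\max_{y} \; P(y) \prod_{j=1}^{n} P(x_j \mid y)
\end{aligned}
$$

The denominator $P(x_1, \dots, x_n)$ is the same for every class, so choosing the most likely class only needs the numerator, and its factors are exactly the two kinds of probability estimated from the training data: the class probability $P(y)$ and the conditional probabilities $P(x_j \mid y)$.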

–EOF (The Ultimate Computing & Technology Blog) —

